The Salesforce Tower in San Francisco stands fifty-nine floors tall with 1.35 million square feet of office space (the image below was created entirely using AI, from Bing on ChatGPT). It was completed in 2018 at a cost of USD 1.1 billion, a very visible crowning moment in the rise to power of Software as a Service (SaaS) and its godfather, Marc Benioff. The building epitomised the third generational wave of technology disruption, in which the smartphone was the catalyst for putting computers in the hands of billions of users. The network effect derived from that, under Metcalfe’s Law, created some of the world’s biggest companies in just over a decade: Amazon, Google and Meta. Apple and Microsoft were also huge beneficiaries, although they were companies from the second generational wave (client-server, the desktop era).

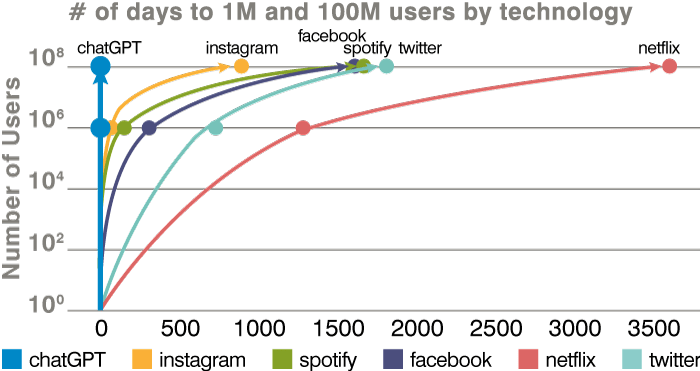

Yet today, one-third of the Salesforce Tower stands idle, the equivalent of 18.4 million square feet of office space. One could be forgiven for thinking that the technology wave had come to an abrupt end, yet nothing could be further from the truth. The launch of ChatGPT in November 2022 has unleashed what we term Digital 4.0, the fourth disruptive wave, which is more far reaching than any of its predecessors. We believe this wave will profoundly change the foundations of every business without the need for the physical opulence that went with its predecessor. AI, together with masses of data, faster networks and the connectivity of everything, promises to accelerate the pace of disruptive change at exponential rates. Each new technology has been adopted faster than the last, and ChatGPT shows just that (see Figure 1).

Figure 1: Number of days to 1 million and 100 million users by technology

Following quickly on the heels of the ChatGPT launch were the Q1 2023 (April end) results from Nvidia. These were so spectacular that they have fired the starting gun in AI investing. A USD 4 billion increase in Q2 revenue guidance, taking the total from an expected USD 7 billion to USD 11 billion, marks perhaps the biggest upgrade to a company operating at scale in the history of technology. It shows clearly that we are far from the end of disruption and that, in spite of the creation of so many huge new companies, the world continues to see innovation orders of magnitude higher at every turn. The reason for the Nvidia raise was demand for large language models (LLMs), the core of AI applications. Nvidia’s newest chipsets can cost upwards of USD 30,000 each, with a large-scale LLM costing as much as USD 300 million. With most of the world’s companies realising the need for AI, demand is just at the beginning. We were onsite at the Nvidia offices in California the morning after this huge earnings beat and, for Nvidia, ChatGPT was “the iPhone moment for Digital 4.0”.

Hype versus reality

Technology titans have been quick to make the scale of change clear. Sundar Pichai, CEO of Alphabet, said, “I’ve always thought of AI as the most profound technology humanity is working on – more profound than fire or electricity or anything we’ve done in the past”. In trying to understand the scale of change and the way in which it will impact our lives, it is important to consider how we must adapt. In education, for example, leaders have been quick to condemn ChatGPT as an unacceptable tool that students can use ‘maliciously’ to pass exams with flying colours. It is true that it is exceptional in this field: the most recent release, GPT-4, reportedly scored in around the top 10% of test takers on a simulated US bar exam. But we believe this misses the point. Bill Gates frames it perfectly in stating that “education needs to shift towards enhancing individual perceptions and creativity rather than fact learning; that Chat GPT passes exams is more a reflection on exams than information on Chat GPT”. The point is that this is here, it is now and it is not going away. We need to adapt. In the corporate world, the winners and losers will be defined by that adaptation, and it will need to happen at speed. Hence the scramble for AI capability and the huge investment in infrastructure, in particular Nvidia GPU chipsets.

There has clearly been hype surrounding the rapid changes since the launch of ChatGPT, but the impacts are becoming very real. We see this breaking into two primary buckets: the enablers, who manufacture the chipsets and the surrounding infrastructure needed to create these LLMs, and the end use cases. The former is clear; it is here and it is happening at pace. The latter is less clear and definitely prone to hype and misinformation. To give one example, companies like Palantir and C3.ai have seen their share prices rocket recently. Year-to-date, Palantir is up 129% and C3.ai is up a staggering 292% (to end May 2023). Both companies have an AI angle, but more as consultancies than as scalable solutions businesses. Just like the addition of “.com” to company names back in the late 1990s, the appearance of AI in the company name seems to ensure instant share price action!

What will we do with it?

End use cases for AI will extend across all industries but some will become apparent quicker than others. We believe that some of the early use cases will be in the following areas:

- Healthcare – drug discovery (AI can already discover possible drug compounds faster than humans)

- Chatbots – customer support will become significantly more dynamic and effective

- Copywriting – articles are already being written using LLMs that are more than a match for human writing at a fraction of the time and cost

- Advertising – targeted advertising will become vastly more efficient with AI

- Coding – one of the more interesting discoveries is that LLMs can write good code and debug existing code very well

- Manufacturing – the automation of manufacturing through AI-led robotics will be substantial

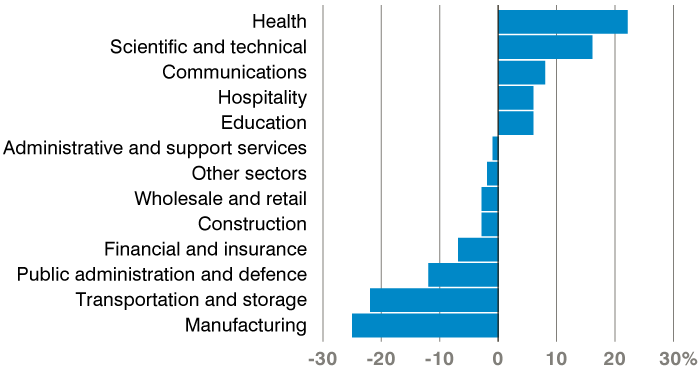

Figure 2: How AI could change the job market

All of the above areas of disruption are labour intensive and we believe there will be significant displacement of labour while new jobs will be created in multiple industries at the same time. This has been highlighted in a number of pieces of research. Goldman Sachs estimates 300 million jobs will be lost to AI by 2030 while McKinsey has a bear case number as high as 800 million. We can see many examples of increased productivity through the use of AI assistants. Think of a team of solicitors working on conveyancing, for example. The work of 10 lawyers and paralegals could probably be easily condensed to five lawyers and five digital assistants. This type of change can extend across many industries.

The impacts will not only be felt in cost savings and margin enhancement. McKinsey again cites a potential USD 13 trillion benefit to global GDP by 2030 with sectors such as manufacturing, healthcare and retail set to be most affected. This equates to a 1.2% annualised effect.
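As a rough sanity check on an annualised figure of this kind, the sketch below compounds a cumulative GDP uplift into a constant yearly growth rate. The base GDP of USD 80 trillion and the 12-year horizon are our own illustrative assumptions, not figures taken from the McKinsey work cited above, and the function name is hypothetical.

```python
def annualised_rate(cumulative_uplift: float, years: int) -> float:
    """Constant yearly growth rate that compounds to `cumulative_uplift` over `years`.

    `cumulative_uplift` is a fraction, e.g. 0.16 for a 16% total increase.
    """
    if years <= 0:
        raise ValueError("years must be positive")
    if cumulative_uplift <= -1.0:
        raise ValueError("cumulative_uplift must be greater than -100%")
    return (1.0 + cumulative_uplift) ** (1.0 / years) - 1.0


# Illustrative assumptions only (not from the article):
ASSUMED_BASE_GDP_USD_TRN = 80.0   # hypothetical starting level of global GDP
ASSUMED_HORIZON_YEARS = 12        # hypothetical number of years to 2030

AI_BENEFIT_USD_TRN = 13.0         # the USD 13 trillion figure quoted in the text

uplift = AI_BENEFIT_USD_TRN / ASSUMED_BASE_GDP_USD_TRN
rate = annualised_rate(uplift, ASSUMED_HORIZON_YEARS)
print(f"Cumulative uplift: {uplift:.1%}, annualised: {rate:.2%}")
```

Under those assumptions the annualised rate comes out between 1.2% and 1.3% a year, in the same region as the figure quoted above. A different base or horizon would move the result, which is why both are exposed as named inputs.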

Regulation

Rarely in the corporate world do we see industry leaders calling for regulation, but AI has become one area where warnings of potentially dire consequences ring loud. As recently as this month, more than 350 AI professionals, including Sam Altman and the “godfathers” of AI, signed a one-sentence statement published by the Center for AI Safety that said, “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

AI regulation is a complex and evolving topic. There is no one-size-fits-all approach, as the right answer will vary with the specific context. However, a number of common principles are emerging as important: transparency, accountability, fairness and safety. Leading AI developers are increasingly calling for regulation of AI. For example, Elon Musk has said that “AI is potentially more dangerous than nuclear weapons” and that “we need to be very careful about how we develop AI.” Yann LeCun, the chief AI scientist at Meta, has said that “we need to regulate AI before it’s too late.”

Governments seem to understand the need, but it is unclear how this will be achieved or who will have the skillsets to manage it effectively. At the same time, companies are playing a proactive role. Nvidia has launched NeMo Guardrails, its software product for developing trustworthy LLM conversational systems.

Summary

AI is here and it’s very real. It needs significant inputs and support from a proliferation of data, fast network speeds and connectivity. This will support the build-out of the ecosystem from chipsets to networking products, connected devices and storage. There are multiple ways to gain access to these factors aside from the market’s most obvious desire to own Nvidia. End use cases will be many, but the list of winners and losers is not easy to define at present. Investment banks have been quick to assemble AI short baskets, but we often find names we think of as AI winners in those baskets. Time will tell, but it does seem clear that software will continue to win in this environment. A recent interview with Cathie Wood at ARK suggested that for every USD 1 of hardware that Nvidia sells, there will be USD 8 spent on software.

Figure 3: Global Artificial Intelligence (AI) Software Market

The magnitude of this spend may be open to question but the direction of travel is not. We have been talking about the Digital 4.0 super wave for some time. It has been fermenting in the background for a while, but we believe that the launch of ChatGPT is its iPhone moment. Investment and opportunity are set to explode. So what of San Francisco office space? Unless it can be converted into the offices of AI, namely data centres, it is likely to remain a permanent legacy of the last wave.